CONCACE (home)

1. Home

1.1. Objective

To tackle challenges posed by extensive scale and dimensionality in both model- and data-driven applications, we'll leverage contemporary development tools and languages to craft high-level expressions of advanced parallel numerical algorithms. While conventional high-performance computing (HPC) strategies emphasize maximizing hardware utilization, our alternative approach will enable a richer composability, thereby harnessing the full potential of both existing and novel numerical algorithms.

1.2. Project

- Project-team title: COmposabilité Numérique et parallèle pour le CAlcul haute performanCE

- Acronym: concace (see also administrative reference resource)

1.3. Partners

The concace project is a joint Inria-Industry team involving members from two private partners, namely, Airbus Central R&T and Cerfacs.

The permanent members possess extensive expertise in parallel computational science, each emphasizing distinct aspects within computer science and applied mathematics. The team comprises individuals from varied academic and professional backgrounds, rendering it diverse and multidisciplinary, with a broad range of skills and knowledge.

This joint initiative was strongly motivated by the partners' common scientific interests and shared view of research actions.

1.4. Team Introduction Video

1.5. Non Technical Team Presentation (EN / FR)

1.5.1. English (EN)

1.6. Activity Reports

You can check out our activity reports.

2. Members

2.1. Permanent members

- Emmanuel Agullo, research scientist, Inria

- Flavie Blondel, team assistant, Inria

- Pierre Benjamin, research project leader modelling & simulation, Airbus Central R&T

- Olivier Coulaud, senior research scientist, Inria

- Luc Giraud, senior research scientist, Inria

- Sofiane Haddad, senior scientist modelling & simulation, Airbus Central R&T

- Carola Kruse, senior researcher, Cerfacs

- Paul Mycek, senior researcher, Cerfacs

- Guillaume Sylvand, research project leader modelling & simulation, Airbus Central R&T

2.2. Post-docs

- Marwin Lasserre, Inria-Airbus Central R&T

- Yanfei Xiang, Inria

2.3. PhD students

- El-Mehdi Attaouchi, EDF, from Mar. 2022

- Théo Briquet, Inria, from Nov. 2023

- Antoine Gicquel, Inria - ANR Diwina, from Nov. 2023

- Atte Torri, LISN (co-advised with O. Kaya, LISN), from Dec. 2021

- Amine Zekri, Laplace (co-advised with J.R. Poirier, Laplace), from Nov. 2023

2.4. Pre-doctoral students

- Hugo Dodelin, Inria (05/24-09/24)

2.6. Interns

2.7. External collaborators

- Oguz Kaya, LISN, Saclay, Associate Professor

- Jean René Poirier (HDR), INP Toulouse, Associate Professor

- Ulrich Rüde, Friedrich-Alexander-Universität, Erlangen-Nürnberg, Germany and Cerfacs

2.8. Former members

- Marek Felsoci, Inria-Airbus Central R&T, PhD, 2019-2023, manuscript available here

- Pierre Esterie, Inria engineer, 2019-2023

- Karim Mohamed El Maarouf, PhD with IFPEN, 2019-2023, manuscript available soon

- Martina Iannacito, Inria, PhD, 2019-2022, manuscript available here

- Antoine Jego, IRIT (co-advised with A. Buttari (CNRS) and A. Guermouche (Topal team)), PhD 2020-2023, manuscript available here

- Romain Peressoni, Inria/Région, PhD 2019-2021, manuscript available here

- Maksym Shpakovych, Inria-Airbus Central R&T, post-doc 2021-2022

- Nicolas Venkovic, Cerfacs, PhD 2018-2022

- Yanfei Xiang, Inria-Cerfacs, PhD, 2019-2022, manuscript available here

3. Job offers

3.1. Master positions with possible continuation in a PhD

- Solving sparse linear systems using modular and adaptive mixed-precision Krylov methods, collaboration with LIP6 in the context of the PEPR Numpex, for more details see here

- Backward stability analysis of numerical linear algebra kernels using normwise perturbations, collaboration with LIP6 in the context of the PEPR Numpex, for more details see here

- Composability of execution models, for more details see here

- Unsupervised learning using spectral approaches, in collaboration with IRIT, for more details see here

- Abstraction of Krylov-type subspace methods, for more details see here

- Unification of hierarchical methods for linear systems processing, for more details see here

- Fault-tolerant numerical iterative algorithms at scale, collaboration with ENS Lyon in the context of the PEPR Numpex, for more details see here

- An apprentice position @ Airbus (in Issy-Les-Moulineaux) to work on scientific computing on GPU, for students starting M2 in September, for more details see here

4. Research

4.1. Context

Over the past few decades, there have been innumerable science, engineering and societal breakthroughs enabled by the development of high performance computing (HPC) applications, algorithms and architectures. These powerful tools have enabled researchers to find computationally efficient solutions to some of the most challenging scientific questions and problems in medicine and biology, climate science, nanotechnology, energy, and environment – to name a few – in the field of model-driven computing.

Meanwhile, the advent of network capabilities and IoT, next-generation sequencing, … tends to generate huge amounts of data that deserve to be processed to extract knowledge and possible forecasts. These calculations are often referred to as data-driven calculations.

These two classes of challenges share common ground in terms of numerical techniques, which lies in the field of linear and multi-linear algebra. They also share common bottlenecks related to the size of the mathematical objects that we have to represent and work on; these challenges are attracting growing attention from the computational science community. Get on board and enter the matrix with us!

4.2. Objective

In this context, the purpose of the concace project is to contribute to the design of novel numerical tools for model-driven and data-driven calculations arising from challenging academic and industrial applications. Solving these challenging problems requires a multidisciplinary approach involving applied mathematics, computational science and computer science. In applied mathematics, it essentially involves advanced numerical schemes, both in terms of numerical techniques and of the data representation of the mathematical objects (e.g., compressed data, low-rank tensors, low-rank hierarchical matrices). In computational science, it involves large-scale parallel heterogeneous computing and the design of highly composable algorithms.

Through this approach, the concace project intends to contribute across all stages ranging from the design of new robust and accurate numerical schemes to the flexible implementation of the corresponding algorithms for modern supercomputers. To address these research challenges, researchers from Airbus Central R&T, Cerfacs and Inria have decided to combine their skills and research efforts within the concace project-team.

The project will enable us to address the complete range of issues, spanning from fundamental methodological considerations to thorough validations on demanding industrial test cases. Collaborating on this joint project will foster genuine synergy between fundamental and practical research, offering complementary advantages to all participating partners.

5. Software

- Avci: Adaptive Vibrational Configuration Interaction is a shared memory C++ software that computes vibrational spectra of molecules.

- cppdiodon: a software package developed jointly with the PLEIADE team, which aims to provide efficient implementations, from laptop to supercomputer, of the main linear dimension reduction methods for learning on massive data sets. It is based on fmr and may be accelerated with chameleon.

- fabulous: Fast Accurate Block Linear krylOv Solver is a software package that implements Krylov subspace methods with a particular emphasis on their block variants for the solution of linear systems with multiple right-hand sides.

- fmr: Fast and accurate Methods for Randomized numerical linear algebra.

- h-mat: Hierarchical-matrix is a way to store and manipulate matrices in a hierarchical and compressed way. The sequential version of the library is available as open-source software (GPL v2) on GitHub; the full-featured parallel version is proprietary, owned by Airbus Central R&T.

- maphys++: maphys++ supersedes the maphys (Massively Parallel Hybrid Solver) software package that implements parallel linear solvers coupling direct and iterative approaches.

- Celeste: it is a C++ library for Efficient Linear and Eigenvalue Solvers using TEnsor decompositions. This library is co-developed with O. Kaya from LISN.

- scalfmm: Scalable Fast Multipole Method is a tool to compute interactions between pairs of particles using the fast multipole method.
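Several of the packages above rely on compressed, low-rank representations of large matrices. As a rough illustration of the idea only, the following Python/NumPy sketch computes a randomized low-rank approximation with a Gaussian sketch. It is not the API of fmr, h-mat or any other package listed here; the function name `randomized_low_rank`, its parameters and the test matrix are hypothetical choices made for this example.

```python
import numpy as np


def randomized_low_rank(a, rank, oversampling=10, seed=0):
    """Return (u, s, vt) such that a is approximately u @ diag(s) @ vt.

    Hypothetical helper for illustration; not taken from any library above.
    """
    rng = np.random.default_rng(seed)
    m, n = a.shape
    k = min(rank + oversampling, m, n)
    # Sketch the column space of `a` with a random Gaussian test matrix.
    omega = rng.standard_normal((n, k))
    # Orthonormal basis (m x k) of the sketched column space.
    q, _ = np.linalg.qr(a @ omega)
    # Project `a` onto that basis and factor the small k x n matrix exactly.
    u_small, s, vt = np.linalg.svd(q.T @ a, full_matrices=False)
    u = q @ u_small
    # Keep only the leading `rank` singular triplets.
    return u[:, :rank], s[:rank], vt[:rank, :]


# Assumed test case: a 200 x 150 matrix of exact rank 5.
rng = np.random.default_rng(42)
a = rng.standard_normal((200, 5)) @ rng.standard_normal((5, 150))

u, s, vt = randomized_low_rank(a, rank=5)
rel_err = np.linalg.norm(a - (u * s) @ vt) / np.linalg.norm(a)
print(f"relative error: {rel_err:.2e}")
```

Storing `u`, `s` and `vt` takes about `rank * (m + n + 1)` numbers instead of `m * n`, which is the kind of saving that compressed formats exploit when the matrix, or blocks of it, are numerically low-rank.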

6. Collaborations

6.1. National initiatives

- High Performance Spacecraft Plasma Interaction Software

- Acronym: HSPIS

- Duration: 2022 - 2024

- Funding: ESA

- Coordinator: ONERA

- Partners: Airbus DS, Artenium, Inria

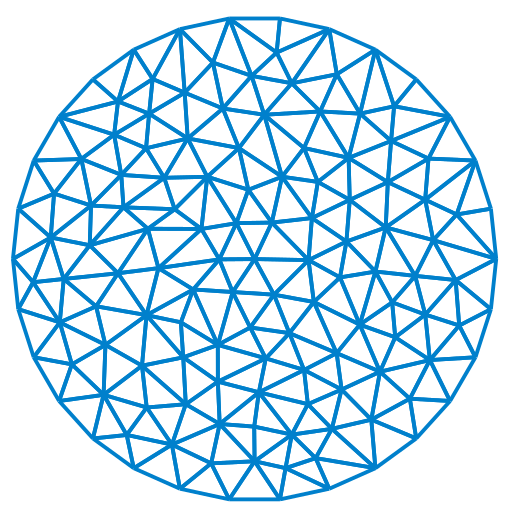

- Summary: Controlling the plasma environment of satellites is a key issue for the nation in terms of satellite design and propulsion. Three-dimensional numerical modelling is thus a key element, particularly in the preparation of future space missions. The SPIS code is today the reference in Europe for the simulation of these phenomena. The methods used to describe the physics of these plasmas are based on the representation of the plasma by a system of particles moving in a mesh (here unstructured) under the effect of the electric field which satisfies the Poisson equation. ESA has recently shown an interest in applications requiring complex 3D calculations, which may involve several tens of millions of cells and several tens of billions of particles, and therefore in a highly parallel and scalable version of the SPIS code.

- Massively parallel sparse grid PIC algorithms for low temperature plasma simulations

- Acronym: Maturation

- Duration: 2022 - 2026

- Funding: ANR

- Coordinator: Laplace

- Partners: IMT, Inria, Maison de la simulation

- Summary: The project aims to introduce a new class of PIC algorithms with unprecedented computational efficiency. It will analyze, improve, parallelize, optimize and benchmark a method recently proposed in the literature, based on a combination of sparse grid techniques and the PIC algorithm, in the demanding context of partially magnetized low temperature plasmas, through large-scale 2D and 3D computations.

- Magnetic Digital Twins for Spintronics: nanoscale simulation platform

- Acronym: Diwina

- Duration: 2022 - 2026

- Funding: ANR

- Coordinator: Institut Néel

- Partners: CMAP, Inria, Spintec

- Summary: The DiWiNa project aims at developing a unified open-access platform for spintronic numerical twins, i.e., codes for micromagnetic/spintronic simulations with sufficiently high reliability and speed that they can be trusted and used as reality. The simulations will be bridged to the advanced microscopy techniques used by the community, through plugins that convert the static or time-resolved 3D vector fields into contrast maps for the various techniques, including their experimental transfer functions. To achieve this, we bring together experts from different disciplines to address the various challenges: spintronics for the core simulations, mathematics for trust, algorithmics for speed, experimentalists for the bridge with microscopy. Practical work consists of checking the time-integration stability of the spintronic torques involved in the dynamics when implemented in the versatile finite-element framework, improving the calculation speed through advanced libraries, building the bridge with microscopy through rendering tools, and encapsulating these three key ingredients in a user-friendly Python ecosystem. Through open access and versatile, user-friendly encapsulation, we expect this platform to serve the needs of the entire physics and engineering community of spintronics. The platform will be unique in its features, ranging from simulation to direct and practical comparison with experiments. It will help to considerably reduce the number of experimental screenings needed, for the faster development of new spintronic devices, which are expected to play a key role in energy saving.

- Low rank tensor decomposition for fast electromagnetic modeling of electrical engineering devices

- Acronym: tensorVIM

- Duration: 2022 - 2026

- Funding: ANR

- Coordinator: Laplace

- Partners: G2ELab, Inria

- Summary: This project aims to develop powerful calculation tools for the rapid implementation of electromagnetic simulations of electrical engineering devices using volume integral methods. These techniques, based on a tensor formulation of the numerical solution, have recently been applied to integral methods and make it possible to reduce the complexity of the calculations and to avoid an increase in the dimension.

6.2. European initiatives

- A network for supporting the coordination of High-Performance Computing research between Europe and Latin America

- Acronym: RISC2

- Duration: 2021 - 2023

- Funding: H2020

- Coordinator: atlantis Inria

- Partners: Forschungszentrum Jülich GmbH (Germany), Inria (France), Bull SAS (France), INESC TEC (Portugal), Universidade de Coimbra (Portugal), CIEMAT (Spain), CINECA (Italy), Universidad de Buenos Aires (Argentina), Universidad Industrial de Santander (Colombia), Universidad de la Republica (Uruguay), Laboratorio Nacional de Computacao Cientifica (Brazil), Centro de Investigacion y de Estudios Avanzados del Instituto Politecnico Nacional (Mexico), Universidad de Chile (Chile), Fundacao Coordenacao de Projetos Pesquisas e Estudos Tecnologicos COPPETEC (Brazil), Fundacion Centro de Alta Tecnologia (Costa Rica)

- Summary: Recent advances in AI and the Internet of things allow high performance computing (HPC) to surpass its limited use in science and defence and extend its benefits to industry, healthcare and the economy. Since all regions intensely invest in HPC, coordination and capacity sharing are needed. The EU-funded RISC2 project connects eight important European HPC actors with the main HPC actors from Argentina, Brazil, Chile, Colombia, Costa Rica, Mexico and Uruguay to enhance cooperation between their research and industrial communities on HPC application and infrastructure development. The project will deliver a cooperation roadmap addressing policymakers and the scientific and industrial communities to identify central application areas, HPC infrastructure and policy needs.

8. Contact

8.1. Join us

8.1.1. Scientific leaders

In our collaborative effort, the leadership team comprises representatives from Airbus Central R&T, Cerfacs and Inria. Join our co-leaders:

8.1.2. Team assistant

- Flavie Blondel (mailto:flavie.blondel@inria.fr)

8.2. Visit us

Our team is distributed across three locations, working collaboratively to achieve our goals.

8.2.1. Bordeaux Team @ Inria

The Bordeaux Team is located in the main building of the Inria Research Center at the University of Bordeaux, on the Talence campus.

Inria Research Center at the University of Bordeaux

200 Avenue de la Vieille Tour

33405 Talence

8.2.2. Paris Team @ Airbus Central R&T

The Paris Team is located in the Airbus CRT/VPE building in Issy-Les-Moulineaux.

Airbus CRT/VPE

22 Rue du Gouverneur Général ÉBOUÉ,

Bât. Colisée EDEN - 4th Floor,

92130 Issy-Les-Moulineaux
